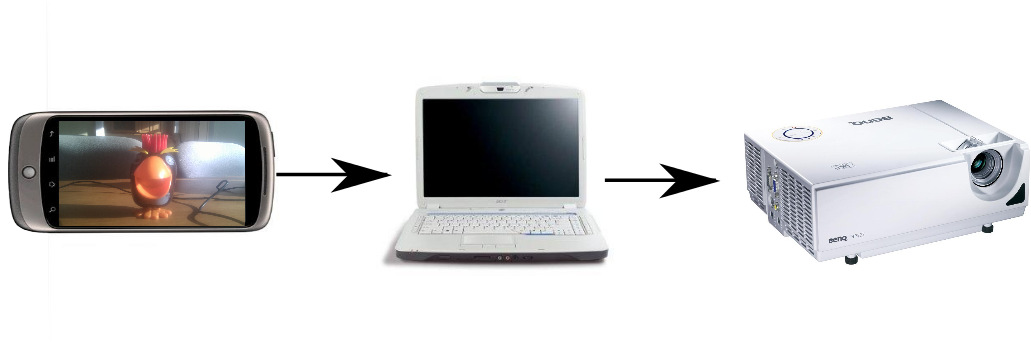

We needed a method of transferring what the camera on an Android device was seeing to another device (a laptop connected to a projector, to be precise).

So we created Peepers which does just that.

Try out the demo, view the slides and take a look at the source.

The problem

We wanted to stream video from a phone to a laptop to a projector.

The pictures show the actual models we used, except that years of abuse have given ours a post-industrial feel.

Our scope was:

- No audio, as we would be streaming in the same room
- It would be running on a local WiFi network
- Bandwidth not a problem
- Jitter would be negligible

What's out there already?

Taking a look around at what was already available, there are a few Android applications that can stream video from the camera across a network.

Open source apps

Closed source

VLC was used as the client, with network caching turned off to reduce latency

$ vlc --network-caching 0 <src>

We found that we were getting around 3 seconds latency with the apps we tried out. However, from pinging the device we knew the time it took to send a packet to and from the device was ~300 milliseconds - our goal was to close that gap.

Stripping down to the MediaRecorder

Going through the spydroid code, stripping out the parts we didn't require (such as the flatulence feature), we learnt the trick that is used to do live streaming on Android.

There is no access to the camera stream via the Android SDK/NDK. To get encoded video, one must instead use a MediaRecorder and send its output to the network.

    MediaRecorder recorder = new MediaRecorder();
    recorder.setVideoSource(MediaRecorder.VideoSource.DEFAULT);
    recorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);
    recorder.setVideoEncoder(MediaRecorder.VideoEncoder.H263);
    recorder.setOutputFile(outputFile);
    recorder.setPreviewDisplay(holder.getSurface());
    recorder.prepare();
    recorder.start();
    // ...
    recorder.stop();

However, the problem is that the video is now only accessible after stop() returns - the lower bound of our latency is now the length of the recorded video!

Tricking the MediaRecorder

Using a LocalSocket, instead of a File, we can start taking data out as soon as the MediaRecorder puts it in.

    LocalServerSocket server = new LocalServerSocket("some name");
    receiver = new LocalSocket();
    receiver.connect(server.getLocalSocketAddress());
    receiver.setReceiveBufferSize(BUFFER_SIZE);
    // accept() hands back the other end of the connection as a LocalSocket
    LocalSocket sender = server.accept();
    sender.setSendBufferSize(BUFFER_SIZE);
    // ...
    // Give the MediaRecorder our fake file
    recorder.setOutputFile(sender.getFileDescriptor());
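To make the reading side concrete, here is a minimal sketch (not the actual Peepers code). It assumes the `receiver` end of the socket pair is read through its `InputStream` (on Android, `receiver.getInputStream()`), and that the bytes are forwarded to some `OutputStream`. `StreamPump` and `pump` are hypothetical names, and plain `java.io` streams stand in for the Android classes so the snippet runs on a desktop JVM.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical helper: forwards bytes as soon as they become available.
final class StreamPump {
    /**
     * Copies everything from in to out until in reports end of stream.
     * Each read returns as soon as some data is available, so bytes are
     * forwarded while the producer is still writing.
     * Returns the total number of bytes copied.
     */
    static long pump(InputStream in, OutputStream out, int bufferSize) throws IOException {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        int n;
        while ((n = in.read(buffer)) != -1) {
            out.write(buffer, 0, n);
            out.flush(); // do not let data sit in a buffer; latency matters here
            total += n;
        }
        return total;
    }
}
```

On a device this would run on its own thread, since `read` blocks until the recorder has written something.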

But what's this rubbish we've been handed?

The MediaRecorder gives us a file, not a stream.

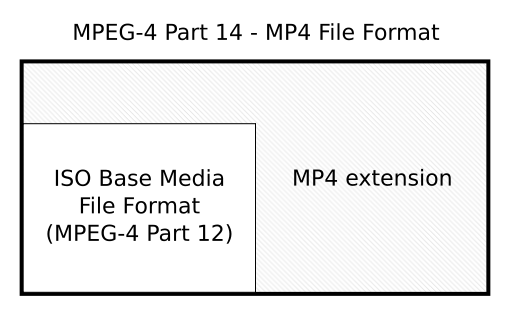

Source: Wikipedia.

Specifically, an MPEG-4 file. MPEG-4 (strictly, MP4, or MPEG-4 Part 14) is a container format, made up of a collection of boxes. Boxes hold metadata, such as information about timestamps and frame rates, as well as the actual video and audio streams (if any).

Extracting video from an MPEG-4 file

Using a hacky heuristic that has yet to fail, we skip over all the data before the mdat box, which gets us to the beginning of the video stream.

    byte[] mdat = { 'm', 'd', 'a', 't' };
    byte[] buffer = new byte[mdat.length];
    do
    {
        fillBuffer(buffer);
    } while (!Arrays.equals(buffer, mdat));
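The loop above compares four bytes at a time, so it relies on the marker starting at a multiple of four bytes from where reading began. As a hedged alternative, the sketch below checks every byte offset instead. It is still a heuristic (a real parser would walk the box sizes), and `MdatSkipper` and `skipToMdat` are hypothetical names, not part of the original code.

```java
import java.io.IOException;
import java.io.InputStream;

// Hypothetical helper: finds the "mdat" marker at any byte offset.
final class MdatSkipper {
    private static final byte[] MDAT = { 'm', 'd', 'a', 't' };

    /**
     * Consumes bytes until the four bytes "mdat" have been read, leaving the
     * stream positioned on the first byte after them. Returns false if the
     * stream ends before the marker is seen.
     */
    static boolean skipToMdat(InputStream in) throws IOException {
        int matched = 0; // how many leading bytes of MDAT we have just seen
        int b;
        while ((b = in.read()) != -1) {
            if ((byte) b == MDAT[matched]) {
                matched++;
                if (matched == MDAT.length) {
                    return true;
                }
            } else {
                // All four marker bytes are distinct, so after a mismatch the
                // only way to still be inside a match is if this byte is 'm'.
                matched = ((byte) b == MDAT[0]) ? 1 : 0;
            }
        }
        return false;
    }
}
```

After `skipToMdat` returns true, the next bytes read from the stream are the start of the media data.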

Over the network and far away

Sending over the network requires us to make a choice about what low-level protocol we want to use.

TCP

Like Royal Mail signed for delivery, with TCP you have a guarantee that the packet was received, which is important as you don't want that carefully crafted tweet about how much you despise Monday mornings to be corrupted in transit.

UDP

Fire and forget about that packet ever bothering you again. It may seem a tad harsh, however this is very useful when we don't mind losing a packet. In terms of streaming live video, we aren't interested in old frames - we only want the latest ones.
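As an illustration of fire and forget, here is a minimal sketch using the standard `java.net.DatagramSocket` API. The payload limit of 1400 bytes is an assumption chosen to stay under a typical Ethernet MTU, and `UdpSender`, `split` and `send` are hypothetical names. Nothing here waits for an acknowledgement: a lost datagram is simply gone.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: cuts data into datagram-sized pieces and sends them.
final class UdpSender {
    static final int MAX_PAYLOAD = 1400; // assumed limit, below a typical MTU

    /** Splits data into consecutive chunks of at most maxPayload bytes. */
    static List<byte[]> split(byte[] data, int maxPayload) {
        List<byte[]> chunks = new ArrayList<>();
        for (int offset = 0; offset < data.length; offset += maxPayload) {
            int end = Math.min(offset + maxPayload, data.length);
            chunks.add(Arrays.copyOfRange(data, offset, end));
        }
        return chunks;
    }

    /** Sends each chunk as its own datagram; no retries, no acknowledgements. */
    static void send(DatagramSocket socket, InetAddress host, int port, byte[] data)
            throws IOException {
        for (byte[] chunk : split(data, MAX_PAYLOAD)) {
            socket.send(new DatagramPacket(chunk, chunk.length, host, port));
        }
    }
}
```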

Raw H.263 over UDP

We chose to try sending the raw video stream the MediaRecorder was writing out over UDP.

Transcoded to OGG for browser playback. Download the original.

VLC magically played it (well, it was able to play H.263; it didn't seem to do anything when we handed it raw H.264). However, we were seeing the same 3 seconds of latency as before.

Enter RTP

Thank heavens, another protocol! RTP allows us to send information about the media that we are sending, such as the codec, source and timestamps.

However, it is these timestamps that cause problems. As the MediaRecorder is the only one who knows the true timestamps of when the frames were taken, and it only writes this data once it has finished recording (which is never in our case), we have to guess for ourselves what the timestamp should be. Which, in our early implementations, resulted in some unique video.
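To show where the guessed timestamp ends up, here is a sketch of the 12-byte fixed RTP header as laid out in RFC 3550. It is not the code we used: the guess simply assumes a constant frame rate, and `RtpHeader`, `guessTimestamp` and `build` are hypothetical names. The 90 kHz clock and payload type 34 for H.263 come from RFC 3551.

```java
import java.nio.ByteBuffer;

// Hypothetical helper: builds the fixed RTP header (no CSRCs, no extension).
final class RtpHeader {
    static final int HEADER_LENGTH = 12;
    static final int CLOCK_RATE_HZ = 90_000; // RTP clock rate for video payloads

    /** Guesses a timestamp from the frame index, assuming a constant frame rate. */
    static long guessTimestamp(long frameIndex, int framesPerSecond) {
        // The RTP timestamp is a 32-bit field, so wrap around accordingly.
        return (frameIndex * CLOCK_RATE_HZ / framesPerSecond) & 0xFFFFFFFFL;
    }

    static byte[] build(int payloadType, boolean marker, int sequenceNumber,
                        long timestamp, int ssrc) {
        ByteBuffer buf = ByteBuffer.allocate(HEADER_LENGTH); // big-endian by default
        buf.put((byte) 0x80); // version 2, no padding, no extension, zero CSRCs
        buf.put((byte) ((marker ? 0x80 : 0) | (payloadType & 0x7F)));
        buf.putShort((short) sequenceNumber); // 16-bit sequence number
        buf.putInt((int) timestamp);          // 32-bit media timestamp
        buf.putInt(ssrc);                     // identifies the stream source
        return buf.array();
    }
}
```

For example, `RtpHeader.build(34, true, seq, RtpHeader.guessTimestamp(frameIndex, 15), ssrc)` would describe one H.263 frame of a stream assumed to run at 15 frames per second. If the real frame rate drifts from the assumed one, the player sees timestamps that disagree with reality.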

Transcoded to OGG for browser playback. Download the original.

Tracing the latency

The latency was still awfully high. We decided to experiment with different parameters, such as bit rate, quality, resolution - all to no avail.

We opined the latency must be due to waiting for the MediaRecorder to do its encoding and decide to write to file.

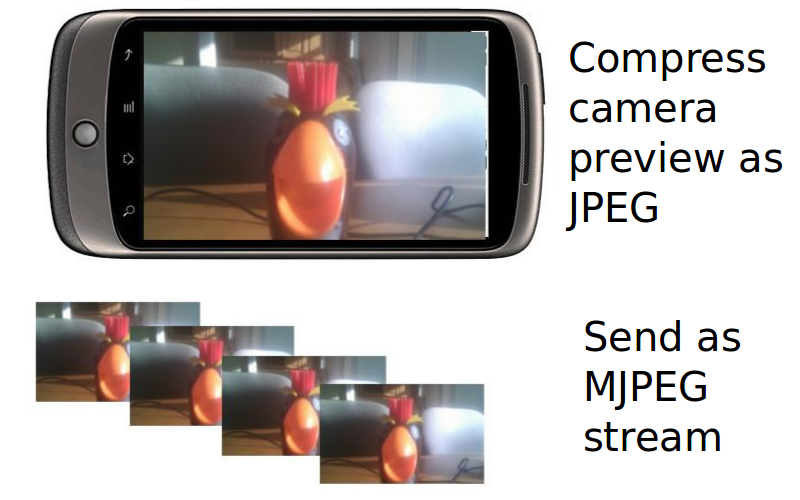

Paradigm shift to Motion JPEG

The preview surface that you have to supply to access the camera is updated in real-time. Why not just send the images from that?

Motion JPEG (MJPEG) is a video format where the data is literally one JPEG after the next, each representing a frame. It is not the most efficient representation of video data, but we are interested in minimising latency, not bandwidth.

So the new plan is to get a preview image, compress it to a JPEG and then send that to the network.

Streaming the camera preview

We create a PreviewCallback and in the callback we receive the bytes representing the preview image and then create a JPEG image.

    Camera.PreviewCallback callback = new Camera.PreviewCallback()
    {
        public void onPreviewFrame(byte[] data, Camera camera)
        {
            // Create JPEG
            YuvImage image = new YuvImage(data, format, width, height,
                    null /* strides */);
            image.compressToJpeg(crop, quality, outputStream);
            // Send it over the network ...
        }
    };
    camera.setPreviewCallback(callback);

We then push the JPEGs out over RTP.

Transcoded to OGG for browser playback. Download the original.

The latency was down initially; however, we were getting unreliable playback using VLC and found that the latency was increasing over time.

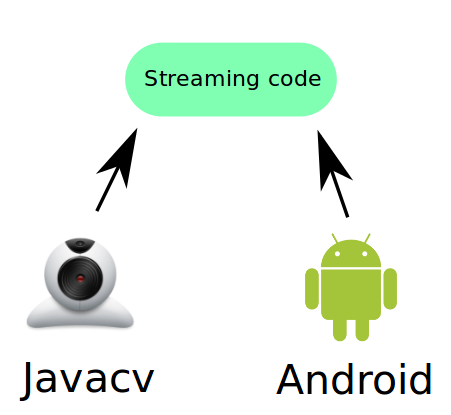

Non-Android testing

In our quest to debug our code, we created non-Android tools that freed us from having to do everything on a device/emulator.

We used: Wireshark to check that the 1s and 0s were well and good; a packet sniffing Python library, pycapy, to get the packets coming in and try to rebuild our JPEGs; and JavaCV to create a local webcam streamer so we could get live video to easily assess the latency.

Webcam icon by ~kyo-tux. The Android robot is reproduced or modified from work created and shared by Google and used according to terms described in the Creative Commons 3.0 Attribution License.

Final form - MJPEG over HTTP

We had our reservations about using HTTP instead of UDP, as we were worried about the extra overhead of a TCP connection.

Our non-Android tools allowed us to rapidly experiment with MJPEG over HTTP (it took around half an hour to get something working). From there we could see that it gave the same low latency results and, even better, would play back in the browser.
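For readers wondering what goes over the wire, the sketch below shows one common way to serve MJPEG over HTTP: a single `multipart/x-mixed-replace` response in which every part is one JPEG. This is an assumption about the wire format rather than a copy of our code; the boundary string `frame` and the names `MjpegHttpWriter`, `writeResponseHeader` and `writeFrame` are hypothetical.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: writes an MJPEG stream as a multipart HTTP response.
final class MjpegHttpWriter {
    private static final String BOUNDARY = "frame"; // assumed boundary string

    /** Written once, right after the client connects. */
    static void writeResponseHeader(OutputStream out) throws IOException {
        String header = "HTTP/1.0 200 OK\r\n"
                + "Content-Type: multipart/x-mixed-replace; boundary=" + BOUNDARY + "\r\n"
                + "Cache-Control: no-cache\r\n"
                + "\r\n";
        out.write(header.getBytes(StandardCharsets.US_ASCII));
        out.flush();
    }

    /** Written once per frame; the browser replaces the previous image. */
    static void writeFrame(OutputStream out, byte[] jpeg) throws IOException {
        String partHeader = "--" + BOUNDARY + "\r\n"
                + "Content-Type: image/jpeg\r\n"
                + "Content-Length: " + jpeg.length + "\r\n"
                + "\r\n";
        out.write(partHeader.getBytes(StandardCharsets.US_ASCII));
        out.write(jpeg);
        out.write("\r\n".getBytes(StandardCharsets.US_ASCII));
        out.flush(); // push the frame out immediately to keep latency low
    }
}
```

On the phone, `out` would be the output stream of an accepted TCP connection, and `writeFrame` would be called with each JPEG produced from the camera preview.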

Transcoded to OGG for browser playback. Download the original.

The latency can be seen in the difference between the image being recorded and the one being shown on the screen (the screen in the recording, that is).